Automatically correlating public campaign contribution data with criminal data to reveal high-risk links.

At Quantifind we believe in the power of combining two datasets to reveal surprising truths – truths which would not be obvious from either dataset alone. Similar to our nearby physicist friends at SLAC (the Stanford Linear Accelerator Center), we are driven by an innate curiosity to smash two things together just to see what comes out.

In our brand work, this involves correlating social chatter with private financial time series to discover the consumer signals that drive sales. In our financial crimes work, we link entities between external open-source data (e.g., news feeds) and our clients’ internal data to discover which transacting individuals carry suspicious red flags.

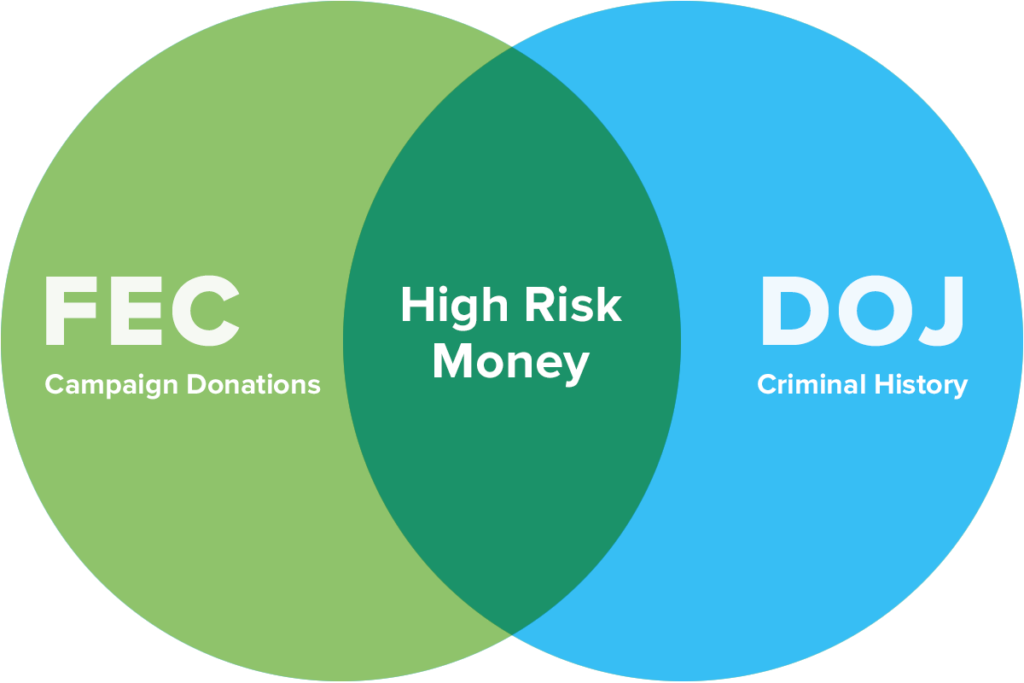

We have many stories from these activities that we simply cannot share because one of these data sets is usually private (either confidential revenue or personal data). However, we can demonstrate our capabilities in certain scenarios where the two datasets both come from public sources. In this post, we will demonstrate our linking intelligence by colliding political campaign contribution data from the FEC (Federal Election Commission) with criminal indictment data from the DOJ (Department of Justice). We can then ask the question: Are there suspected criminals making contributions to a given political campaign?

A growing number of financial data sets (e.g., blockchain, Venmo) are at least partially open to the public. We chose the FEC contribution data set as an analogous example of our usual work for several reasons. Besides being timely and relevant to certain kinds of criminal activity (e.g., campaign fraud, “pay-to-play” schemes), it offers clean data on the identity of the contributors. The contributors are analogous to the customers of a bank, while the campaigns on the receiving end of the contributions (including PACs and individual candidates) are analogous to the bank itself. We can therefore help the institution (the campaign) determine whether it is exposed in a way that may require risk mitigation. For example, a discovered criminal link may lead a campaign to return a contribution, or to “exit” a relationship to err on the conservative side.

On the other side, we chose the public DOJ data set to play the “external” role because it is a high-quality, rich source of criminal entity information. It represents only a small part of our full range of external data options (which also include news, forums, public social media, and many structured watch lists), but it serves as a useful focal point due to its precision and potential relevance.

METHODOLOGY AND RESULTS

Unlike the FEC data, the DOJ data does not come in a clean, structured format. To enable linking, we first parse these unstructured documents into entities (people and organizations) using Named Entity Recognition (NER), and extract metadata that can assist in the eventual entity linking (e.g., age and location). This process essentially turns the DOJ data into a structured database that sets the table for the linking process. Our linking algorithms then attempt to discover specific individuals who exist in both data sets and score those links with a quantitative confidence level. In other words, a specific donor in the FEC data is automatically identified as the same person as a specific individual named in the DOJ data. Without getting into the details, the confidence level depends on a number of factors, including name rarity, location proximity, and agreement on other pieces of metadata and context. By using modern machine learning methods, both entity recognition and linking can be made significantly more accurate, more robust, and more up-to-date than with traditional heuristic methods.
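To make the scoring step concrete, here is a minimal sketch of how a link confidence could combine name rarity, location agreement, and one extra metadata field. It is not Quantifind's model: the `Person` schema, the inverse-frequency rarity measure, the logistic combination, and every weight below are hypothetical assumptions chosen for illustration, and names are compared exactly rather than with the fuzzy matching a production linker would need.

```python
import math
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Person:
    """Hypothetical record shape shared by FEC donors and DOJ-extracted entities."""
    name: str
    state: Optional[str] = None
    city: Optional[str] = None
    organization: Optional[str] = None  # FEC employer, or a company named in the DOJ text


def normalize(text: str) -> str:
    """Uppercase and collapse whitespace so trivially different spellings compare equal."""
    return " ".join(text.upper().split())


def name_rarity(name: str, name_counts: Counter, total: int) -> float:
    """Inverse-frequency rarity in [0, 1]; a rare name is stronger evidence of identity."""
    count = name_counts.get(normalize(name), 0) + 1  # add-one smoothing
    rarity = math.log((total + 1) / count) / math.log(total + 1)
    return max(0.0, min(1.0, rarity))


def link_confidence(donor: Person, subject: Person, name_counts: Counter, total: int) -> float:
    """Logistic combination of evidence; all weights are hypothetical, not learned."""
    if normalize(donor.name) != normalize(subject.name):
        return 0.0  # this sketch only scores exact (normalized) name matches
    z = -2.0 + 3.0 * name_rarity(donor.name, name_counts, total)
    if donor.state and subject.state:
        # Agreement on state is evidence for a match; disagreement is evidence against.
        z += 1.5 if normalize(donor.state) == normalize(subject.state) else -2.0
    if donor.city and subject.city and normalize(donor.city) == normalize(subject.city):
        z += 1.0
    if (donor.organization and subject.organization
            and normalize(donor.organization) == normalize(subject.organization)):
        z += 1.5
    return 1.0 / (1.0 + math.exp(-z))


# Toy name-frequency table standing in for real population statistics.
counts = Counter({"JOHN SMITH": 500, "JANE QUIMBLEY": 1})
total = 1000
rare = link_confidence(Person("Jane Quimbley", "TX", "Austin"),
                       Person("JANE QUIMBLEY", "TX", "Austin"), counts, total)
common = link_confidence(Person("John Smith", "TX", "Austin"),
                         Person("JOHN SMITH", "TX", "Austin"), counts, total)
print(f"rare name: {rare:.2f}, common name: {common:.2f}")
```

With identical location evidence, the rare name scores well above the common one, which is the intuition behind weighting name rarity.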

When combining the FEC data from the current election cycle (2017-18) with DOJ data spanning nearly a decade, we get several thousand candidate links. Limiting these to “high-confidence” links reduces this number to several hundred. Several of these high-confidence links are shown in the following table.
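Comparing every donor against every DOJ entity grows with the product of the two data set sizes, so record-linkage systems commonly apply a cheap "blocking" step to generate candidates and only then score and threshold them. The sketch below assumes a blocking key of last name plus state and a 0.9 cutoff for "high confidence"; both are hypothetical choices, and the toy `exact_name_score` function stands in for a real confidence model.

```python
from collections import defaultdict
from typing import Callable, Dict, Iterable, List, Tuple

Record = Dict[str, str]
Scored = Tuple[float, Record, Record]


def block_key(rec: Record) -> Tuple[str, str]:
    """Hypothetical blocking key: uppercase last name plus state (empty if unknown)."""
    last = rec["name"].upper().split()[-1]
    return last, rec.get("state", "").upper()


def candidate_pairs(donors: Iterable[Record], subjects: Iterable[Record]) -> List[Tuple[Record, Record]]:
    """Pair each donor only with subjects that share its blocking key."""
    index: Dict[Tuple[str, str], List[Record]] = defaultdict(list)
    for s in subjects:
        index[block_key(s)].append(s)
    return [(d, s) for d in donors for s in index.get(block_key(d), [])]


def high_confidence(pairs: Iterable[Tuple[Record, Record]],
                    score: Callable[[Record, Record], float],
                    threshold: float = 0.9) -> List[Scored]:
    """Score every candidate, keep those at or above the threshold, best first."""
    scored = [(score(d, s), d, s) for d, s in pairs]
    kept = [t for t in scored if t[0] >= threshold]
    return sorted(kept, key=lambda t: t[0], reverse=True)


def exact_name_score(d: Record, s: Record) -> float:
    """Toy stand-in for a real confidence model."""
    return 1.0 if d["name"].upper() == s["name"].upper() else 0.5


donors = [{"name": "Jane Doe", "state": "TX"},
          {"name": "John Doe", "state": "TX"},
          {"name": "Jane Doe", "state": "OH"}]
subjects = [{"name": "JANE DOE", "state": "TX"}]
pairs = candidate_pairs(donors, subjects)
links = high_confidence(pairs, exact_name_score)
print(len(pairs), "candidates ->", len(links), "high-confidence link(s)")
```

The same shape (many candidates in, a thresholded few out) is what turns several thousand candidate links into a few hundred high-confidence ones, though the real blocking and scoring are certainly richer than this.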

| Contributors | DOJ Title | FEC Amount | Timing (FEC vs. DOJ) | Campaigns |
|---|---|---|---|---|
| GERALD LUNDERGAN, DALE EMMONS | Two Indicted For Making Corporate Contributions To U.S. Senate Campaign | $8,400, $2,310 | BEFORE, BEFORE | redacted |
| ANILESH AHUJA | Hedge Fund Founder, Portfolio Manager, And Trader Charged In Manhattan Federal Court With Mismarking Securities By Hundreds Of Millions Of Dollars | $16,200 | BEFORE | redacted |
| BEN WOOTTON | Owners of Biofuel Company Indicted on Conspiracy and False Statement Charges | $2,500 | AFTER | redacted |
| MATTHEW MCTISH | President Of Engineering Firm Admits To Bribing Elected Officials In Allentown And Reading | -$200 | AFTER | redacted |

Note that we have not included the names of the campaigns, to avoid any appearance of unintentional bias. Because we are not sharing the full set of links, any small sub-sample could give a misleading impression of bias; we share these results only to demonstrate our methodology, not to dwell on specifics.

The results in the table above illustrate the same critical points we have found in our work with private data. Once confidence is established, the next key question is relevance: a link has been found, but is it pertinent to the investigation, and should the institution care? What truly constitutes a red flag to a bank, or a campaign, is a long conversation, but suffice it to say that risk falls on a spectrum, and thresholds must be set together with the institution as part of a proper risk assessment strategy. Timing matters: is a link more relevant when the criminal event occurs before or after the financial transaction (the donation)? Topic matters: a campaign finance violation may be more relevant than an unrelated drug conviction. Realistically, the dollar amount matters as well: the larger the donation, the greater the potential exposure. Combining these factors, we can build a prioritization strategy that ranks risks from “urgent” to “backburner”. Ultimately, the definition of relevance comes out of a conversation between the technologists (what is possible to measure) and the clients (what should be measured and what is actionable).
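One way to turn those factors into a ranked queue is a simple multiplicative score. This is a hedged sketch, not a recommended policy: the topic weights, the choice to treat a donation made after the criminal event as riskier, the log-scaled dollar exposure capped at $10,000, and the tier cut-offs are all hypothetical placeholders for values that would come out of the conversation with the client.

```python
import math
from dataclasses import dataclass

# Hypothetical topic weights; real values would be agreed with the institution.
TOPIC_WEIGHT = {"campaign_finance": 1.0, "bribery": 0.9, "securities_fraud": 0.7, "drugs": 0.3}
DEFAULT_TOPIC_WEIGHT = 0.2


@dataclass
class Link:
    confidence: float          # link confidence in [0, 1]
    topic: str                 # topic of the criminal event
    amount: float              # contribution in dollars; negative values are refunds
    donated_after_event: bool  # True if the donation came after the DOJ event


def priority(link: Link, amount_cap: float = 10_000.0) -> float:
    """Product of confidence, topic relevance, timing, and dollar exposure; in [0, 1]."""
    topic = TOPIC_WEIGHT.get(link.topic, DEFAULT_TOPIC_WEIGHT)
    timing = 1.0 if link.donated_after_event else 0.7  # hypothetical assumption, not a rule
    # Log scale so a $10,000 gift is not treated as 100x as urgent as a $100 gift;
    # refunds (negative amounts) count as zero remaining exposure.
    exposure = math.log1p(max(link.amount, 0.0)) / math.log1p(amount_cap)
    return link.confidence * topic * timing * min(exposure, 1.0)


def tier(score: float) -> str:
    """Hypothetical cut-offs mapping a score onto the urgent-to-backburner scale."""
    if score >= 0.5:
        return "urgent"
    if score >= 0.2:
        return "review"
    return "backburner"


queue = [
    Link(0.95, "campaign_finance", 8_400, donated_after_event=False),
    Link(0.90, "drugs", 200, donated_after_event=True),
    Link(0.90, "bribery", -200, donated_after_event=True),  # refund
]
for link in sorted(queue, key=priority, reverse=True):
    print(tier(priority(link)), link.topic, link.amount)
```

A multiplicative form means any single factor near zero (low confidence, an irrelevant topic, a refunded gift) pushes the link toward the backburner, which may or may not match a given institution's policy.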

In the specific case of campaign contributions, a key question for assessing relevance is: what is actually illegal? This is a famously evolving area of political discussion, but some clear lines exist. Ex-criminals, while an exposure risk, are typically allowed to contribute, but foreign nationals are not. Nor can individuals use “straw donors” (people who make contributions in their own names with someone else’s money) to evade contribution limits. Dead people are not allowed to contribute, but that does not always stop them. While we have focused on criminal exposure in this post, we can use similar technology to help identify violations of these hard-and-fast rules.
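Checks of this kind are closer to deterministic rules than to probabilistic risk scores. A minimal sketch, assuming each contribution has already been linked to a hypothetical death-record date and a foreign-national flag: `rule_flags` applies those two hard rules directly, while `straw_donor_clusters` is only a heuristic for one common straw-donor pattern (several identical, same-day contributions from employees of one employer to one campaign), whose hits would need human review rather than being treated as violations.

```python
from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Dict, List, Optional, Set, Tuple


@dataclass
class Contribution:
    donor: str
    employer: str
    campaign: str
    amount: float
    on: date
    date_of_death: Optional[date] = None  # hypothetical: from a linked death record
    foreign_national: bool = False        # hypothetical: from a linked identity source


def rule_flags(c: Contribution) -> List[str]:
    """Hard-rule checks on a single contribution."""
    flags = []
    if c.date_of_death is not None and c.on > c.date_of_death:
        flags.append("dated after donor's death")
    if c.foreign_national:
        flags.append("donor matched to a foreign national")
    return flags


def straw_donor_clusters(contribs: List[Contribution],
                         min_donors: int = 3) -> List[Tuple[str, str, date, float]]:
    """Heuristic: distinct donors from one employer giving one campaign the same amount on one day."""
    groups: Dict[Tuple[str, str, date, float], Set[str]] = defaultdict(set)
    for c in contribs:
        groups[(c.employer.upper(), c.campaign, c.on, c.amount)].add(c.donor.upper())
    return [key for key, donors in groups.items() if len(donors) >= min_donors]


day = date(2018, 3, 1)
contribs = [
    Contribution("Ann Able", "Acme Corp", "Campaign A", 2700, day),
    Contribution("Bob Baker", "ACME CORP", "Campaign A", 2700, day),
    Contribution("Cy Cole", "Acme Corp", "Campaign A", 2700, day),
    Contribution("Dee Dunn", "Other LLC", "Campaign B", 500, day, date_of_death=date(2016, 5, 2)),
]
for c in contribs:
    for flag in rule_flags(c):
        print(c.donor, "->", flag)
print(straw_donor_clusters(contribs))
```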

Finally, if one is interested in “high-risk” areas rather than criminal indictments, we can swap the DOJ dataset for a targeted negative-news dataset. Similar to the strategy described in our previous post about “Phantom Secure”, we can dynamically create a list of entities associated with a given topic and use it to determine which campaigns receive the most FEC donations from these groups. The following table shows political contributors associated with topics.
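Once a topic-specific entity list exists, ranking campaigns by exposure is a straightforward aggregation. The sketch below assumes contributions arrive as hypothetical `(donor, campaign, amount)` tuples and that each topic's entities are already resolved to normalized names; a real pipeline would aggregate over linked entity IDs produced by the matching step rather than over raw name strings.

```python
from collections import defaultdict
from typing import Dict, Iterable, List, Set, Tuple


def topic_exposure(contributions: Iterable[Tuple[str, str, float]],
                   topic_entities: Dict[str, Set[str]]) -> Dict[str, List[Tuple[str, float]]]:
    """For each topic, total donations per campaign from that topic's entities, largest first."""
    totals: Dict[str, Dict[str, float]] = defaultdict(lambda: defaultdict(float))
    for donor, campaign, amount in contributions:
        name = " ".join(donor.upper().split())  # same normalization as the entity list
        for topic, entities in topic_entities.items():
            if name in entities:
                totals[topic][campaign] += amount
    return {topic: sorted(by_campaign.items(), key=lambda kv: kv[1], reverse=True)
            for topic, by_campaign in totals.items()}


topics = {"fraud": {"JANE DOE", "JOHN ROE"}}  # hypothetical entity list for one topic
contributions = [
    ("Jane Doe", "Campaign A", 500.0),
    ("John Roe", "Campaign B", 1000.0),
    ("Jane Doe", "Campaign B", 250.0),
    ("Sam Poe", "Campaign A", 5000.0),  # not in any topic list, so ignored
]
print(topic_exposure(contributions, topics))
```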

It should be clear how this process can be generalized to any other pair of data sets. At Quantifind, we incorporate a similar version of this process into our product to help our clients mitigate risk in know-your-customer (KYC) applications. As the technology to make these connections at scale advances, and as more public data sources like those mentioned here become available, financial institutions can no longer afford to plead ignorance about their customers and the transactions they receive or help facilitate.

If you are interested in learning more, please contact us.